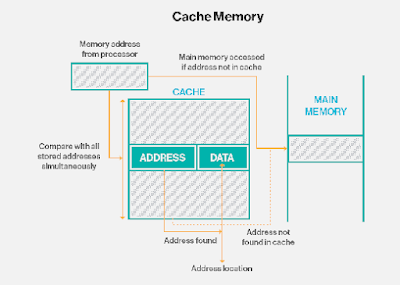

Cache memory is a small, high-speed memory that temporarily stores copies of the data and instructions the CPU is likely to need next.

Cache mapping: the technique used to map blocks of main memory onto lines of cache memory.

There are different types of Cache Mapping:

Direct Mapping: a mapping technique that maps each block of main memory to exactly one cache line.

Fully Associative Mapping: a mapping technique that allows a block of main memory to be placed in any cache line.

Comparing Direct Mapping and Fully Associative Mapping:

Direct Mapping maps each block to one specific cache line using the formula (cache line) = (block number) mod (number of cache lines), while Fully Associative Mapping allows a block to be placed in any cache line.
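
The placement rule for Direct Mapping can be sketched in a few lines. This is only an illustration: the function name `direct_mapped_line` and the 8-line cache size are assumptions made for the example, not values from these notes.

```python
def direct_mapped_line(block_number: int, num_lines: int) -> int:
    """Return the only cache line a block may occupy under direct mapping."""
    return block_number % num_lines


NUM_LINES = 8  # assumed cache size in lines, chosen only for illustration

# Blocks 3, 11 and 19 all land on line 3, so they evict one another.
for block in (3, 11, 19):
    print(block, "->", direct_mapped_line(block, NUM_LINES))
```

Under Fully Associative Mapping there is no such formula: any of the lines could hold any of these blocks, so the three blocks could be cached at the same time.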

Direct Mapping divides the memory address into three fields: Tag, Block (the cache line index) and Word, while Fully Associative Mapping divides it into two fields: Tag and Word.
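
To make the address fields concrete, here is a minimal sketch that splits an address both ways. The field widths (3 line bits, 2 word bits), the sample address, and the function names are assumptions chosen for the example.

```python
def split_direct(address: int, line_bits: int, word_bits: int):
    """Split an address into (tag, line, word) as direct mapping does."""
    word = address & ((1 << word_bits) - 1)                 # lowest bits
    line = (address >> word_bits) & ((1 << line_bits) - 1)  # middle bits
    tag = address >> (word_bits + line_bits)                # remaining high bits
    return tag, line, word


def split_fully_associative(address: int, word_bits: int):
    """Split an address into (tag, word); there is no line field."""
    word = address & ((1 << word_bits) - 1)
    tag = address >> word_bits
    return tag, word


address = 0b101101101101  # hypothetical 12-bit address
print(split_direct(address, line_bits=3, word_bits=2))
print(split_fully_associative(address, word_bits=2))
```

The fully associative tag is longer because it absorbs the bits that direct mapping spends on the line index.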

Fully Associative Mapping is more complex to implement than Direct Mapping, which is the simplest mapping technique.

Because Fully Associative Mapping needs extra hardware to compare the requested tag against every cache line, it is more expensive than Direct Mapping.

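Search time is lower in Direct Mapping because each block can reside in only one cache line, so only one tag has to be checked. Fully Associative Mapping takes more search time, or needs one comparator per line to search in parallel, because the requested tag must be checked against the tag of every line.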
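
The difference in search effort can be illustrated by counting tag comparisons in a small software model. This is a sketch under stated assumptions: the cache is modelled as a plain list of stored tags (with `None` for an empty line), the function names are made up for the example, and the sequential loop stands in for what real hardware typically does in parallel with one comparator per line.

```python
def direct_lookup(lines, tag, line_index):
    """Check the single line the block can occupy. Returns (hit, comparisons)."""
    return lines[line_index] == tag, 1


def fully_associative_lookup(lines, tag):
    """Check every line until the tag is found. Returns (hit, comparisons)."""
    comparisons = 0
    for stored_tag in lines:
        comparisons += 1
        if stored_tag == tag:
            return True, comparisons
    return False, comparisons


lines = [None, 5, None, 9]  # hypothetical 4-line cache holding tags 5 and 9

print(direct_lookup(lines, 9, line_index=3))
print(fully_associative_lookup(lines, 9))
print(fully_associative_lookup(lines, 7))
```

Direct mapping always performs one comparison, while the fully associative search may have to examine every line before it can report a miss.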

Difference between Program Counter and Register $0

The main difference between a program counter and a memory address register is that the program counter (PC) holds the address of the next instruction to be fetched, whereas the memory address register (MAR) holds the address of the memory location being accessed at that moment, whether the access is an instruction fetch or a data read or write.

During instruction fetch, the address in the PC is first copied into the address register (AR), which plays the role of the MAR here: AR ← PC. The PC is then incremented so that it holds the address of the next instruction: PC ← PC + 1.

When a load or store instruction executes, the MAR instead holds the address of the data that is being loaded into, or stored from, one of the CPU's registers.
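
The two register transfers of the fetch phase can be traced with a small simulation. It is a sketch only: the word-addressed `memory` list and its instruction strings are hypothetical, and `fetch_step` is a name invented for the example.

```python
def fetch_step(pc, memory):
    """Perform one instruction fetch and return (new_pc, ar, instruction)."""
    ar = pc                   # AR <- PC
    instruction = memory[ar]  # memory is read at the address held in AR
    pc = pc + 1               # PC <- PC + 1
    return pc, ar, instruction


# Hypothetical program, one instruction per memory word.
memory = ["LOAD 7", "ADD 8", "STORE 9", "HALT"]

pc = 0
for _ in range(3):
    pc, ar, instruction = fetch_step(pc, memory)
    print(f"AR={ar} fetched {instruction!r}, PC is now {pc}")
```

After each step the AR still holds the address of the instruction just fetched, while the PC already points one word ahead.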

In case of 64-bit and 128-bit architecture, what would be the increment in memory address for sequential instruction execution: The increment is determined by the size of each instruction rather than by the address width alone. Assuming byte-addressable memory and instructions that each occupy one full word, the program counter would advance by 8 bytes per instruction with 64-bit words and by 16 bytes with 128-bit words.

Because software must control the actual memory addressing hardware, switching from a 64-bit to a 128-bit architecture is a significant change. Most operating systems would have to be heavily modified to take advantage of the new architecture. Older 64-bit software could be supported either because the 128-bit instruction set is a superset of the 64-bit instruction set, so that processors supporting the 128-bit instruction set can also run 64-bit code, or through software emulation. An operating system for such a 128-bit architecture would typically handle both 64-bit and 128-bit programs.

Some programs, such as encoders, decoders, and encryption software, can gain considerably from 128-bit registers, while the performance of other programs, such as 3D graphics-oriented programs, may be unaffected.

Address width also matters for memory-mapped files. When a file is larger than the address space (for example, a file over 4 GiB in a 32-bit address space), only a portion of the file can be mapped at a time, and the mapped portions must be swapped into and out of the address space as needed. This is a problem because memory mapping is one of the most efficient disk-to-memory methods when properly implemented by the operating system. A 64-bit address space is already far larger than the multi-gigabyte files that are common today, so whole files of that size can be mapped at once.
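
The address increment for sequential execution can be worked out with a short calculation. This sketch assumes byte-addressable memory and one instruction per 64-bit or 128-bit word, which is a simplification: real instruction sets often use instruction lengths unrelated to the address width. The function name `next_pc` and the starting address are hypothetical.

```python
def next_pc(pc: int, instruction_bits: int, addressable_unit_bits: int = 8) -> int:
    """Return the address of the next sequential instruction.

    Assumes every instruction is instruction_bits wide and that each
    address names one unit of addressable_unit_bits (8 = byte-addressable).
    """
    return pc + instruction_bits // addressable_unit_bits


start = 0x1000  # hypothetical address of the current instruction

print(hex(next_pc(start, 64)))   # 64-bit instructions: +8 bytes
print(hex(next_pc(start, 128)))  # 128-bit instructions: +16 bytes
```

If memory were word-addressable instead, with one instruction per word, the increment would simply be 1, which matches the PC ← PC + 1 form of the register transfer.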