Here we will discuss the basic differences between the maximum a posteriori (MAP) and maximum likelihood (MLE) estimates of the parameter vector in a linear regression model.

In statistics, the maximum likelihood estimate (MLE) of a parameter is the value of that parameter that maximizes the likelihood. The likelihood is a function of the parameter, and its value at a given parameter is the probability (or probability density) of the observed data conditional on that parameter.
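For the linear regression model discussed here, a common assumption (ours, for illustration) is that y = Xw + ε with independent Gaussian noise ε ~ N(0, σ²). Under that assumption, maximizing the log-likelihood over w is equivalent to minimizing the sum of squared residuals, so the MLE is the ordinary least-squares solution. A minimal NumPy sketch; the helper names and toy data are hypothetical:

```python
import numpy as np


def gaussian_log_likelihood(w, X, y, noise_var):
    """Log p(y | X, w), assuming y = X @ w + eps with eps ~ N(0, noise_var * I)."""
    resid = y - X @ w
    n = y.shape[0]
    return -0.5 * n * np.log(2 * np.pi * noise_var) - 0.5 * resid @ resid / noise_var


def mle_weights(X, y):
    """MLE of w under the Gaussian-noise assumption: the least-squares solution.

    The maximizer does not depend on noise_var, so it is not an argument here.
    """
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w


# Hypothetical toy data.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
w_true = np.array([1.5, -2.0, 0.5])
y = X @ w_true + rng.normal(scale=0.3, size=50)

w_mle = mle_weights(X, y)
# Moving away from w_mle lowers the log-likelihood (it is the unique maximizer).
ll_at_mle = gaussian_log_likelihood(w_mle, X, y, noise_var=0.09)
ll_nearby = gaussian_log_likelihood(w_mle + 0.05, X, y, noise_var=0.09)
print(w_mle, ll_at_mle > ll_nearby)
```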

The maximum a posteriori (MAP) estimate is the value of the parameter that maximizes the posterior distribution of that parameter, which is obtained from the likelihood and the prior via Bayes' rule. In other words, the MAP estimate is the mode of the posterior distribution.

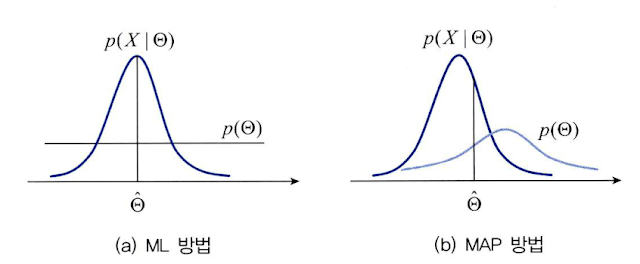
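In the linear regression setting, suppose (an assumption for illustration) Gaussian noise with variance σ² and a zero-mean Gaussian prior on the weights, w ~ N(0, τ² I). The log-posterior is then the log-likelihood plus −‖w‖²/(2τ²), up to a constant, and its mode has the closed form of ridge regression with penalty λ = σ²/τ². The helper `map_weights` below is hypothetical, not a library API:

```python
import numpy as np


def map_weights(X, y, noise_var, prior_var):
    """Mode of p(w | X, y), assuming Gaussian noise and a N(0, prior_var * I) prior.

    Setting the gradient of the log-posterior to zero gives
    (X^T X + lam * I) w = X^T y with lam = noise_var / prior_var (ridge regression).
    """
    lam = noise_var / prior_var
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)


# Hypothetical toy data: few points, so the prior has a visible effect.
rng = np.random.default_rng(1)
X = rng.normal(size=(10, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.5, size=10)

w_mle, *_ = np.linalg.lstsq(X, y, rcond=None)
w_map = map_weights(X, y, noise_var=0.25, prior_var=0.5)
# The zero-mean prior pulls the estimate toward the origin.
print(np.linalg.norm(w_map), "<", np.linalg.norm(w_mle))
```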

Note that if the prior distribution is uniform (constant over the parameter space), the MLE and MAP estimates coincide.
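The reason follows from Bayes' rule in log form, writing D for the observed data and θ for the parameters:

```latex
\log p(\theta \mid D) = \log p(D \mid \theta) + \log p(\theta) - \log p(D)

\text{If } p(\theta) = c \text{ for all } \theta: \quad
\hat\theta_{\mathrm{MAP}}
  = \arg\max_\theta \big[\log p(D \mid \theta) + \log c\big]
  = \arg\max_\theta \log p(D \mid \theta)
  = \hat\theta_{\mathrm{MLE}}
```

The evidence p(D) does not depend on θ, so it never affects the arg max, and a constant prior only shifts the objective by log c.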

The likelihood function takes both the data and the model parameters as inputs. One natural way to read it is with the parameters held fixed: given particular values of the model parameters, how probable is a specific data point or data set?

Now suppose instead that you hold the data fixed (it has already been observed) and let the parameters vary. In that case the likelihood function gives the likelihood (rather than the probability, since it is not normalized over the parameters) of each parameter value. In this second view, the maximum likelihood estimate (MLE) is simply the mode of the likelihood.
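To make the "fix the data, vary the parameter" view concrete, here is a sketch with a one-parameter model y = w·x + ε, ε ~ N(0, 1). The model, the three data points and the grid are all made up for illustration. The likelihood is evaluated on a grid of w values; its arg max is the MLE, and its area over w is not 1, which is why it is a likelihood rather than a probability distribution over w:

```python
import numpy as np

# Illustrative assumption: fixed, already-observed data for y = w * x + noise.
x = np.array([1.0, 2.0, 3.0])
y = np.array([1.1, 1.9, 3.2])
sigma = 1.0  # assumed known noise standard deviation


def likelihood(w):
    """p(y | x, w) for y_i = w * x_i + eps_i, eps_i ~ N(0, sigma^2), i.i.d."""
    resid = y - w * x
    norm_const = (2 * np.pi * sigma**2) ** (-len(y) / 2)
    return norm_const * np.exp(-0.5 * np.sum(resid**2) / sigma**2)


grid = np.linspace(-1.0, 3.0, 4001)          # candidate values of w, step 0.001
lik = np.array([likelihood(w) for w in grid])

w_mle_grid = grid[np.argmax(lik)]             # mode of the likelihood over the grid
w_mle_exact = np.sum(x * y) / np.sum(x * x)   # closed-form least-squares slope
area = np.sum(lik) * (grid[1] - grid[0])      # Riemann-sum integral over w

print(f"grid MLE {w_mle_grid:.3f}, exact {w_mle_exact:.3f}, area {area:.3f}")
```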

The problem with MLE is that it can overfit the data, producing high variance in the parameter estimates; in other words, the estimate is highly sensitive to random fluctuations in the data set, which becomes pathological when there is little data. Regularising the MLE often helps with this, i.e., reducing variance at the cost of introducing some bias into the estimate. Maximum a posteriori (MAP) estimation achieves this regularisation by assuming that the parameters themselves, like the data, are drawn from a random process. The form of that random process is determined by our prior beliefs about the parameters.
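A small simulation can illustrate this variance reduction. Under assumptions chosen purely for illustration (a fixed 5×3 design matrix, hypothetical true weights, Gaussian noise, and a N(0, τ² I) prior whose MAP estimate is ridge regression), the sketch redraws the noise many times and compares how much the MLE and MAP estimates scatter, and how far their averages sit from the true weights:

```python
import numpy as np

rng = np.random.default_rng(42)
n, d = 5, 3                      # deliberately few data points per fit
noise_var, prior_var = 1.0, 1.0  # illustrative assumptions
lam = noise_var / prior_var      # ridge penalty implied by the Gaussian prior

X = rng.normal(size=(n, d))      # design held fixed; only the noise is redrawn
w_true = np.array([1.0, -0.5, 0.25])  # hypothetical true weights

mle_fits, map_fits = [], []
for _ in range(2000):
    y = X @ w_true + rng.normal(scale=np.sqrt(noise_var), size=n)
    mle_fits.append(np.linalg.lstsq(X, y, rcond=None)[0])
    map_fits.append(np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y))

mle_fits, map_fits = np.array(mle_fits), np.array(map_fits)

# Total variance: sum of per-coefficient variances across the simulated data sets.
var_mle = mle_fits.var(axis=0).sum()
var_map = map_fits.var(axis=0).sum()
# Bias: distance between the average estimate and the true weights.
bias_mle = np.linalg.norm(mle_fits.mean(axis=0) - w_true)
bias_map = np.linalg.norm(map_fits.mean(axis=0) - w_true)

print(f"MLE: variance {var_mle:.3f}, bias {bias_mle:.3f}")
print(f"MAP: variance {var_map:.3f}, bias {bias_map:.3f}")
```

With the design held fixed, each MAP fit is a shrunken linear transform of the corresponding MLE fit, so its scatter is always smaller; the price is that its average no longer matches the true weights exactly.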

The prior beliefs about the parameters are a design choice. If they are strong, the observed data have relatively little impact on the parameter estimates (low variance but high bias); if they are weak, the outcome behaves more like standard MLE (low bias but high variance). Consequently, MAP yields the same result as MLE in two limits: with an infinite amount of data (as long as the prior assigns nonzero probability throughout the parameter space), and with an infinitely weak prior (i.e., a uniform prior over the parameter space).
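Both limits can be checked numerically in a hypothetical linear-Gaussian setup, where the MAP estimate under a N(0, τ² I) prior is ridge regression with λ = σ²/τ². The sketch measures the distance between the MAP and MLE estimates (1) as the number of data points n grows with the prior fixed, and (2) for a fixed data set as τ² grows, i.e., as the prior gets weaker:

```python
import numpy as np


def mle(X, y):
    """Least-squares fit, the MLE under the assumed Gaussian noise."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


def map_ridge(X, y, noise_var, prior_var):
    """MAP under an assumed N(0, prior_var * I) prior: ridge with lam = noise_var / prior_var."""
    lam = noise_var / prior_var
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


# Hypothetical data-generating process.
rng = np.random.default_rng(7)
w_true = np.array([2.0, -1.0, 0.5])
noise_var = 1.0
X_all = rng.normal(size=(5000, 3))
y_all = X_all @ w_true + rng.normal(scale=np.sqrt(noise_var), size=5000)

# (1) More data, same prior: the likelihood dominates and MAP approaches MLE.
gap_vs_n = []
for n in [5, 50, 500, 5000]:
    X, y = X_all[:n], y_all[:n]
    gap_vs_n.append(np.linalg.norm(map_ridge(X, y, noise_var, 1.0) - mle(X, y)))

# (2) Same data, weaker prior: as prior_var grows, lam -> 0 and MAP approaches MLE.
X, y = X_all[:20], y_all[:20]
gap_vs_prior = [
    np.linalg.norm(map_ridge(X, y, noise_var, tau2) - mle(X, y))
    for tau2 in [0.01, 1.0, 100.0, 1e4]
]

print("gap vs n:    ", np.round(gap_vs_n, 5))
print("gap vs tau^2:", np.round(gap_vs_prior, 5))
```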
